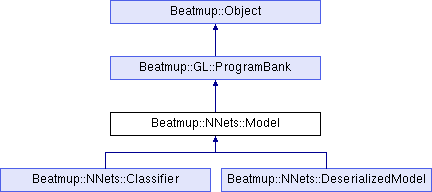

Neural net model.

#include <model.h>

Classes

| Kind | Name | Description |
|---|---|---|
| struct | Connection | Connection descriptor. |
| struct | UserOutput | A user-defined output descriptor. |

Public Member Functions

| Return type | Member | Description |
|---|---|---|
|  | Model (Context &context, std::initializer_list< AbstractOperation * > ops) | Instantiates a model from a list of operations, interconnecting them in a feedforward fashion. |
|  | Model (Context &context) | Instantiates an empty model. |
|  | ~Model () | |
| void | append (AbstractOperation *newOp, bool connect=false) | Adds a new operation to the model. |
| void | append (std::initializer_list< AbstractOperation * > newOps, bool connect=false) | Adds new operations to the model. |
| void | addOperation (const std::string &opName, AbstractOperation *newOp) | Adds a new operation to the model before another operation in the execution order. |
| void | addOperation (const AbstractOperation &operation, AbstractOperation *newOp) | |
| void | addConnection (const std::string &sourceOpName, const std::string &destOpName, int output=0, int input=0, int shuffle=0) | Adds a connection between two given ops. |
| void | addOutput (const std::string &operation, int output=0) | Enables reading output data from the model memory through getOutputData(). |
| void | addOutput (const AbstractOperation &operation, int output=0) | |
| const float * | getOutputData (size_t &numSamples, const std::string &operation, int output=0) const | Reads data from the model memory. |
| const float * | getOutputData (size_t &numSamples, const AbstractOperation &operation, int output=0) const | |
| virtual void | prepare (GraphicPipeline &gpu, ChunkCollection &data) | Prepares all operations: reads the model data from chunks and builds GPU programs. |
| bool | isReady () const | |
| void | execute (TaskThread &thread, GraphicPipeline *gpu) | Runs the inference. |
| bool | isOperationInModel (const AbstractOperation &operation) const | Checks if a specific operation is part of the model. |
| AbstractOperation & | getFirstOperation () | |
| AbstractOperation & | getLastOperation () | |
| const AbstractOperation & | getFirstOperation () const | |
| const AbstractOperation & | getLastOperation () const | |
| size_t | getNumberOfOperations () const | |
| template< class OperationClass = AbstractOperation > OperationClass & | getOperation (const std::string &operationName) | Retrieves an operation by its name. |
| const ProgressTracking & | getPreparingProgress () const | Returns model preparation progress tracking. |
| const ProgressTracking & | getInferenceProgress () const | Returns inference progress tracking. |
| unsigned long | countMultiplyAdds () const | Provides an estimation of the number of multiply-adds characterizing the model complexity. |
| unsigned long | countTexelFetches () const | Provides an estimation of the total number of texels fetched by all the operations in the model per image. |
| size_t | getMemorySize () const | Returns the amount of texture memory in bytes currently allocated by the model to run the inference. |
| Listing | serialize () const | Returns a serialized representation of the model as a Listing. |
| std::string | serializeToString () const | Returns a serialized representation of the model as a string. |
| void | setProfiler (Profiler *profiler) | Attaches a profiler instance to meter the execution time per operation during the inference. |

Public Member Functions inherited from Beatmup::GL::ProgramBank

| Return type | Member | Description |
|---|---|---|
|  | ProgramBank (Context &context) | |
|  | ~ProgramBank () | |
| GL::RenderingProgram * | operator() (GraphicPipeline &gpu, const std::string &code, bool enableExternalTextures=false) | Provides a program given a fragment shader source code. |
| void | release (GraphicPipeline &gpu, GL::RenderingProgram *program) | Marks a program as no longer used. |

Public Member Functions inherited from Beatmup::Object

| Return type | Member | Description |
|---|---|---|
| virtual | ~Object () | |

Protected Member Functions

| Return type | Member | Description |
|---|---|---|
| void | freeMemory () | Frees all allocated storages. |
| Storage & | allocateStorage (GraphicPipeline &gpu, const Size size, bool forGpu=true, bool forCpu=false, const int pad=0, const int reservedChannels=0) | Allocates a new storage. |
| Storage & | allocateFlatStorage (GraphicPipeline &gpu, const int size) | Allocates a new flat storage. |
| GL::Vector & | allocateVector (GraphicPipeline &gpu, const int size) | Allocates a vector that can be used as operation input or output. |
| InternalBitmap & | allocateTexture (GraphicPipeline &gpu, const Size size) | Allocates a texture that can be used as operation input or output. |
| bool | isPreceding (const AbstractOperation &first, const AbstractOperation &second) const | Checks whether an operation goes before another operation in the model according to the execution order of the ops. |
| AbstractOperation * | operator[] (const std::string &operationName) | |
| const AbstractOperation * | operator[] (const std::string &operationName) const | |
| void | addConnection (AbstractOperation &source, AbstractOperation &dest, int output=0, int input=0, int shuffle=0) | |

Protected Attributes

| Type | Name | Description |
|---|---|---|
| std::vector< AbstractOperation * > | ops | model operations |
| ProgressTracking | preparingProgress | model preparation progress |
| ProgressTracking | inferenceProgress | inference progress |
| bool | ready | if true, ops are connected to each other and storages are allocated |

Protected Attributes inherited from Beatmup::GL::ProgramBank

| Type | Name | Description |
|---|---|---|
| Context & | context | |

Private Attributes

| Type | Name | Description |
|---|---|---|
| std::multimap< const AbstractOperation *, Connection > | connections | source operation => connection descriptor mapping |
| std::multimap< const AbstractOperation *, UserOutput > | userOutputs | operation => user output mapping |
| std::vector< Storage * > | storages | allocated storages used during the inference |
| std::vector< GL::Vector * > | vectors | allocated vectors used during the inference |
| std::vector< InternalBitmap * > | textures | allocated images used during the inference |
| Profiler * | profiler | pointer to a Profiler attached to the model |

Detailed Description

Neural net model.

Contains a list of operations and programmatically defined interconnections between them using addConnection(). Enables access to the model memory at any point in the model through addOutput() and getOutputData(). The memory needed to store internal data during the inference is allocated automatically; storages are reused when possible. The inference of a Model is performed by InferenceTask.

Constructor & Destructor Documentation

◆ Model() [1/2]

Model::Model(Context &context, std::initializer_list< AbstractOperation * > ops)

Instantiates a model from a list of operations interconnecting them in a feedforward fashion.

The first output of every operation is connected to the first input of its successor. Optional connections may be added after model creation.

- Parameters

  - [in,out] context A context instance
  - [in] ops Operations given in the execution order. The Model does not take ownership of them.

Definition at line 27 of file model.cpp.
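The feedforward wiring rule above can be sketched in isolation. This is a minimal illustration, not Beatmup code: ToyOp, ToyConnection and connectFeedforward are hypothetical names, and the source => connection multimap only loosely mirrors the private connections member of the class.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for an operation (not a Beatmup class).
struct ToyOp {
    std::string name;
};

// Hypothetical connection descriptor, loosely modeled on Model::Connection.
struct ToyConnection {
    const ToyOp* dest;  // operation receiving the data
    int output;         // output index of the source operation
    int input;          // input index of the destination operation
};

// Connects output #0 of every operation to input #0 of its successor and
// records each link in a source => connection multimap.
std::multimap<const ToyOp*, ToyConnection> connectFeedforward(const std::vector<const ToyOp*>& ops) {
    std::multimap<const ToyOp*, ToyConnection> connections;
    for (std::size_t i = 0; i + 1 < ops.size(); ++i)
        connections.emplace(ops[i], ToyConnection{ops[i + 1], 0, 0});
    return connections;
}
```

Keying a multimap by the source operation lets one source feed several destinations, which is what the optional connections added after model creation would require.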

◆ Model() [2/2]

Model::Model(Context &context)

Instantiates an empty model.

- Parameters

  - [in,out] context A context instance used to store internal resources needed for inference

Definition at line 38 of file model.cpp.

◆ ~Model()

Model::~Model()

Definition at line 40 of file model.cpp.

Member Function Documentation

◆ freeMemory()

void Model::freeMemory()  [protected]

Frees all allocated storages.

Definition at line 415 of file model.cpp.

◆ allocateStorage()

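Storage & Model::allocateStorage(GraphicPipeline &gpu, const Size size, bool forGpu = true, bool forCpu = false, const int pad = 0, const int reservedChannels = 0)  [protected]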

Allocates a new storage.

Its views may be used as operation inputs and outputs. The storage is destroyed together with the model.

- Parameters

  - [in,out] gpu A graphic pipeline instance
  - [in] size The storage size (width, height, number of channels)
  - [in] forGpu Allocate for use on GPU
  - [in] forCpu Allocate for use on CPU
  - [in] pad Storage padding: number of pixels added on both sides along the width and height of every channel
  - [in] reservedChannels Number of additional channels that may be sampled together with the storage. This does not change the storage size, but affects how the channels are packed into textures. It allows the storage to be sampled in the same shader together with other storages of a given total depth, provided that reservedChannels is greater than or equal to that total depth.

- Returns

- newly allocated storage.

Definition at line 428 of file model.cpp.
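As a worked example of the pad parameter, the sketch below computes the spatial size of every channel of a padded storage. StorageSize and paddedSize are hypothetical helpers; only the padding rule stated above is modeled, not the packing of channels into textures or the effect of reservedChannels.

```cpp
#include <cassert>

// Hypothetical stand-in for a storage size (width, height, number of channels).
struct StorageSize {
    int width, height, depth;
};

// Size of every channel once `pad` pixels are added on both sides along
// width and height; the number of channels is unchanged.
StorageSize paddedSize(const StorageSize& size, int pad) {
    return StorageSize{size.width + 2 * pad, size.height + 2 * pad, size.depth};
}
```

For instance, a 32x24 storage with 16 channels and pad = 1 holds channels of 34x26 pixels each.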

◆ allocateFlatStorage()

Storage & Model::allocateFlatStorage(GraphicPipeline &gpu, const int size)  [protected]

Allocates a new flat storage.

Its views are used as operation inputs and outputs. Flat storages can be inputs of Dense layers. The storage is destroyed together with the model.

- Parameters

  - [in,out] gpu A graphic pipeline instance
  - [in] size Number of samples in the storage

- Returns

- newly allocated storage.

Definition at line 439 of file model.cpp.

◆ allocateVector()

GL::Vector & Model::allocateVector(GraphicPipeline &gpu, const int size)  [protected]

Allocates a vector that can be used as operation input or output.

Unlike flat storages, vectors store floating-point data (GL ES 3.1 and higher) or 16-bit signed fixed-point values with an 8-bit fractional part (GL ES 2.0).

- Parameters

  - [in,out] gpu A graphic pipeline instance
  - [in] size Number of samples in the vector

Definition at line 447 of file model.cpp.
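The GL ES 2.0 fixed-point format described above (16-bit signed, 8 fractional bits) maps a real value x to the integer round(x * 256). The following CPU-side sketch of that conversion is illustrative only: toFixed and fromFixed are hypothetical helpers, the round-half-away-from-zero rounding and the saturation policy are assumptions, and it says nothing about how Beatmup actually stores these values in textures.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// 16-bit signed fixed point with 8 fractional bits: value = raw / 256.
// Representable range: [-128, 128 - 1/256], resolution 1/256.
constexpr float kScale = 256.0f;

std::int16_t toFixed(float x) {
    float scaled = std::round(x * kScale);  // assumed rounding: half away from zero
    // Assumed policy: saturate to the int16 range instead of overflowing.
    if (scaled > 32767.0f) scaled = 32767.0f;
    if (scaled < -32768.0f) scaled = -32768.0f;
    return static_cast<std::int16_t>(scaled);
}

float fromFixed(std::int16_t raw) {
    return static_cast<float>(raw) / kScale;
}
```

With 8 fractional bits the resolution is 1/256 = 0.00390625, so 1.5 is stored exactly as 384, while 0.1 becomes 26/256 = 0.1015625; values outside [-128, 128) cannot be represented.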

◆ allocateTexture()

InternalBitmap & Model::allocateTexture(GraphicPipeline &gpu, const Size size)  [protected]

Allocates a texture that can be used as operation input or output.

- Parameters

  - [in,out] gpu A graphic pipeline instance
  - [in] size Image size. The depth can be 1, 3 or 4 channels.

Definition at line 460 of file model.cpp.

◆ isPreceding()

bool Model::isPreceding(const AbstractOperation &first, const AbstractOperation &second) const  [protected]

Checks whether an operation goes before another operation in the model according to the execution order of the ops.

- Parameters

  - [in] first The first operation (expected to be executed earlier)
  - [in] second The second operation (expected to be executed later)

- Returns

- true if both operations are in the model and the first one is executed before the second one, false otherwise.
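The contract of isPreceding() can be expressed over a plain vector of operation pointers kept in execution order. This sketch uses a hypothetical ToyOp type and a free function; it reproduces the documented return value, not necessarily the library's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

struct ToyOp {};  // hypothetical stand-in for AbstractOperation

// Returns true if both operations are in `ops` and `first` comes before `second`.
bool isPreceding(const std::vector<const ToyOp*>& ops, const ToyOp& first, const ToyOp& second) {
    auto firstIt = std::find(ops.begin(), ops.end(), &first);
    if (firstIt == ops.end())
        return false;
    // Searching for `second` only after `first` checks membership and order at once.
    return std::find(std::next(firstIt), ops.end(), &second) != ops.end();
}
```

An operation compared with itself yields false, since it does not go before itself.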

◆ operator[]() [1/2]

AbstractOperation * Model::operator[](const std::string &operationName)  [protected]

◆ operator[]() [2/2]

const AbstractOperation * Model::operator[](const std::string &operationName) const  [protected]

◆ addConnection() [1/2]

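void Model::addConnection(AbstractOperation &source, AbstractOperation &dest, int output = 0, int input = 0, int shuffle = 0)  [protected]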

Definition at line 91 of file model.cpp.

◆ append() [1/2]

void Model::append(AbstractOperation *newOp, bool connect = false)

Adds a new operation to the model.

The operation is added to the end of the operations list. The execution order corresponds to the order of addition. The Model does not take ownership of the passed pointer.

- Parameters

  - [in] newOp The new operation
  - [in] connect If true, the main input (#0) of the new operation is connected to the main output (#0) of the last operation

Definition at line 47 of file model.cpp.

◆ append() [2/2]

void Model::append(std::initializer_list< AbstractOperation * > newOps, bool connect = false)

Adds new operations to the model.

The operations are added to the end of the operations list. The execution order corresponds to the order of addition. The Model does not take ownership of the passed pointers.

- Parameters

  - [in] newOps The new operations
  - [in] connect If true, the main input (#0) of every operation is connected to the main output (#0) of the preceding operation

Definition at line 62 of file model.cpp.

◆ addOperation() [1/2]

void Model::addOperation(const std::string &opName, AbstractOperation *newOp)

Adds a new operation to the model before another operation in the execution order.

The Model does not take ownership of the passed pointer. The new operation is not automatically connected to other operations.

- Parameters

  - [in] opName Name of the operation before which the new operation is inserted
  - [in] newOp The new operation

Definition at line 68 of file model.cpp.
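Inserting before a named operation amounts to a search followed by a vector insertion. In the sketch below, ToyOp and insertBefore are hypothetical, and throwing std::invalid_argument for an unknown name is an assumed policy; the documentation above does not say what happens in that case.

```cpp
#include <algorithm>
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

struct ToyOp {  // hypothetical stand-in for AbstractOperation
    std::string name;
};

// Inserts `newOp` right before the operation called `opName` in the execution order.
// No connections are created. Throws if no operation has that name (assumed policy).
void insertBefore(std::vector<ToyOp*>& ops, const std::string& opName, ToyOp* newOp) {
    auto it = std::find_if(ops.begin(), ops.end(),
                           [&](const ToyOp* op) { return op->name == opName; });
    if (it == ops.end())
        throw std::invalid_argument("operation not found: " + opName);
    ops.insert(it, newOp);
}
```

Because the new operation is left unconnected, its inputs and outputs still have to be wired explicitly with addConnection().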

◆ addOperation() [2/2]

void Model::addOperation(const AbstractOperation &operation, AbstractOperation *newOp)

◆ addConnection() [2/2]

void Model::addConnection(const std::string &sourceOpName, const std::string &destOpName, int output = 0, int input = 0, int shuffle = 0)

Adds a connection between two given ops.

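- Parameters

  - [in] sourceOpName Name of the operation emitting the data
  - [in] destOpName Name of the operation receiving the data
  - [in] output Output index of the source operation
  - [in] input Input index of the destination operation
  - [in] shuffle Shuffling parameter (defaults to 0)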

Definition at line 84 of file model.cpp.

◆ addOutput() [1/2]

void Model::addOutput(const std::string &operation, int output = 0)

Enables reading output data from the model memory through getOutputData().

A given operation output is connected to a storage that may be accessed by the application after the run.

- Parameters

  - [in] operation Name of the operation or the operation itself to get data from
  - [in] output The operation output index

Definition at line 101 of file model.cpp.

◆ addOutput() [2/2]

void Model::addOutput(const AbstractOperation &operation, int output = 0)

Definition at line 113 of file model.cpp.

◆ getOutputData() [1/2]

const float * Model::getOutputData(size_t &numSamples, const std::string &operation, int output = 0) const

Reads data from the model memory.

addOutput() must be called first to enable reading the data; otherwise, null is returned.

- Parameters

  - [out] numSamples Returns the number of samples in the pointed data buffer
  - [in] operation Name of the operation or the operation itself to get data from
  - [in] output The operation output index

- Returns

- pointer to the data stored as a 3D array in (height, width, channels) layout, or null.

Definition at line 125 of file model.cpp.
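Since getOutputData() returns a flat pointer plus a sample count, reading a given element relies on the (height, width, channels) layout. The sketch assumes a dense buffer with no gaps between rows and with channels varying fastest, which is the usual meaning of that layout; hwcIndex and sampleAt are hypothetical helpers. The width and channel count must be known from the operation itself, because getOutputData() only reports numSamples.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Offset of sample (y, x, c) in a buffer laid out as a 3D array of
// (height, width, channels): channels vary fastest, then columns, then rows.
std::size_t hwcIndex(std::size_t y, std::size_t x, std::size_t c,
                     std::size_t width, std::size_t channels) {
    return (y * width + x) * channels + c;
}

// Hypothetical helper reading one sample; the debug-only assert checks the
// offset against the numSamples value reported alongside the pointer.
float sampleAt(const float* data, std::size_t numSamples,
               std::size_t y, std::size_t x, std::size_t c,
               std::size_t width, std::size_t channels) {
    const std::size_t i = hwcIndex(y, x, c, width, channels);
    assert(data != nullptr && i < numSamples);
    return data[i];
}
```

In application code, the buffer would come from a call such as model.getOutputData(numSamples, "conv1"), where "conv1" is a hypothetical operation name previously passed to addOutput().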

◆ getOutputData() [2/2]

const float * Model::getOutputData(size_t &numSamples, const AbstractOperation &operation, int output = 0) const

◆ prepare()

void Model::prepare(GraphicPipeline &gpu, ChunkCollection &data)  [virtual]

Prepares all operations: reads the model data from chunks and builds GPU programs.

The model inputs need to be provided beforehand. Preparation progress is tracked by a ProgressTracking instance (getPreparingProgress()).

- Parameters

  - [in,out] gpu A graphic pipeline instance
  - [in] data ChunkCollection containing the model data

Definition at line 143 of file model.cpp.

◆ isReady()

bool Model::isReady() const  [inline]

◆ execute()

void Model::execute(TaskThread &thread, GraphicPipeline *gpu)

Runs the inference.

- Parameters

  - [in,out] thread Task thread instance
  - [in,out] gpu A graphic pipeline

Definition at line 339 of file model.cpp.

◆ isOperationInModel()

bool Model::isOperationInModel(const AbstractOperation &operation) const

◆ getFirstOperation() [1/2]

AbstractOperation & Model::getFirstOperation()  [inline]

◆ getLastOperation() [1/2]

AbstractOperation & Model::getLastOperation()  [inline]

◆ getFirstOperation() [2/2]

const AbstractOperation & Model::getFirstOperation() const  [inline]

◆ getLastOperation() [2/2]

const AbstractOperation & Model::getLastOperation() const  [inline]

◆ getNumberOfOperations()

size_t Model::getNumberOfOperations() const  [inline]

◆ getOperation()

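template< class OperationClass = AbstractOperation > OperationClass & Model::getOperation(const std::string &operationName)  [inline]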

◆ getPreparingProgress()

const ProgressTracking & Model::getPreparingProgress() const  [inline]

◆ getInferenceProgress()

const ProgressTracking & Model::getInferenceProgress() const  [inline]

◆ countMultiplyAdds()

unsigned long Model::countMultiplyAdds() const

Provides an estimation of the number of multiply-adds characterizing the model complexity.

Queries the number of multiply-adds of every operation of the model and sums them up.
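The summation described above can be sketched with std::accumulate. ToyOp, with its precomputed multiplyAdds field, is a hypothetical stand-in for an operation that reports its own estimate.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Hypothetical stand-in for an operation reporting its own multiply-add count.
struct ToyOp {
    unsigned long multiplyAdds;
};

// Sums the per-operation estimates over the whole model.
unsigned long countMultiplyAdds(const std::vector<const ToyOp*>& ops) {
    return std::accumulate(ops.begin(), ops.end(), 0UL,
                           [](unsigned long acc, const ToyOp* op) { return acc + op->multiplyAdds; });
}
```

The accumulator uses unsigned long to match the documented return type; note that unsigned long is only 32 bits wide on some platforms (for example 64-bit Windows), which may matter for very large models.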

◆ countTexelFetches()

unsigned long Model::countTexelFetches() const

◆ getMemorySize()

size_t Model::getMemorySize() const

Returns the amount of texture memory in bytes currently allocated by the model to run the inference.

When the model is ready to run, this is the amount of memory needed to store internal data during the inference. The value does not include the size of the GLSL shader binaries stored in GPU memory, which can be significant.

◆ serialize()

Listing Beatmup::NNets::Model::serialize() const

Returns serialized representation of the model as a Listing.

◆ serializeToString()

std::string Model::serializeToString() const

◆ setProfiler()

void Model::setProfiler(Profiler *profiler)  [inline]

Member Data Documentation

◆ connections

std::multimap< const AbstractOperation *, Connection > Model::connections  [private]

◆ userOutputs

std::multimap< const AbstractOperation *, UserOutput > Model::userOutputs  [private]

◆ storages

std::vector< Storage * > Model::storages  [private]

◆ vectors

std::vector< GL::Vector * > Model::vectors  [private]

◆ textures

std::vector< InternalBitmap * > Model::textures  [private]

◆ profiler

Profiler * Model::profiler  [private]

◆ ops

std::vector< AbstractOperation * > Model::ops  [protected]

◆ preparingProgress

ProgressTracking Model::preparingProgress  [protected]

◆ inferenceProgress

ProgressTracking Model::inferenceProgress  [protected]

◆ ready

bool Model::ready  [protected]

The documentation for this class was generated from the following files: