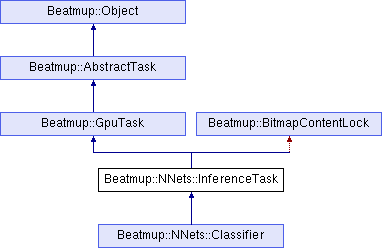

Task running inference of a Model.

#include <inference_task.h>

Public Member Functions | |

| InferenceTask (Model &model, ChunkCollection &data) | |

| void | connect (AbstractBitmap &image, AbstractOperation &operation, int inputIndex=0) |

| Connects an image to a specific operation input. | |

| void | connect (AbstractBitmap &image, const std::string &operation, int inputIndex=0) |

Public Member Functions inherited from Beatmup::Object | |

| virtual | ~Object () |

Protected Attributes | |

| ChunkCollection & | data |

| Model & | model |

Private Member Functions | |

| void | beforeProcessing (ThreadIndex threadCount, ProcessingTarget target, GraphicPipeline *gpu) override |

| Instruction called before the task is executed. | |

| void | afterProcessing (ThreadIndex threadCount, GraphicPipeline *gpu, bool aborted) override |

| Instruction called after the task is executed. | |

| bool | processOnGPU (GraphicPipeline &gpu, TaskThread &thread) override |

| Executes the task on GPU. | |

| bool | process (TaskThread &thread) override |

| Executes the task on CPU within a given thread. | |

| ThreadIndex | getMaxThreads () const override |

| Gives the upper limit on the number of threads the task may be performed by. | |

Private Member Functions inherited from Beatmup::BitmapContentLock | |

| BitmapContentLock () | |

| ~BitmapContentLock () | |

| void | readLock (GraphicPipeline *gpu, AbstractBitmap *bitmap, ProcessingTarget target) |

| Locks content of a bitmap for reading using a specific processing target device. | |

| void | writeLock (GraphicPipeline *gpu, AbstractBitmap *bitmap, ProcessingTarget target) |

| Locks content of a bitmap for writing using a specific processing target device. | |

| void | unlock (AbstractBitmap *bitmap) |

| Drops a lock to the bitmap. | |

| void | unlockAll () |

| Unlocks all the locked bitmaps unconditionally. | |

| template<const ProcessingTarget target> | |

| void | lock (GraphicPipeline *gpu, AbstractBitmap *input, AbstractBitmap *output) |

| void | lock (GraphicPipeline *gpu, ProcessingTarget target, AbstractBitmap *input, AbstractBitmap *output) |

| template<const ProcessingTarget target> | |

| void | lock (GraphicPipeline *gpu, std::initializer_list< AbstractBitmap * > read, std::initializer_list< AbstractBitmap * > write) |

| template<typename ... Args> | |

| void | unlock (AbstractBitmap *first, Args ... others) |

Private Attributes | |

| std::map< std::pair< AbstractOperation *, int >, AbstractBitmap * > | inputImages |

Additional Inherited Members | |

Public Types inherited from Beatmup::AbstractTask | |

| enum class | TaskDeviceRequirement { CPU_ONLY , GPU_OR_CPU , GPU_ONLY } |

| Specifies which device (CPU and/or GPU) is used to run the task. | |

Static Public Member Functions inherited from Beatmup::AbstractTask | |

| static ThreadIndex | validThreadCount (int number) |

| Valid thread count from a given integer value. | |

Detailed Description

Task running inference of a Model.

During the first run of this task with a given model, the shader programs are built and the memory is allocated. Subsequent runs are much faster.
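The first-run cost described above follows a common lazy-initialization pattern. The sketch below is not Beatmup code: `LazyTask`, `prepare()`, and `run()` are hypothetical names standing in for a task that builds its resources once and reuses them on later runs.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a task with a costly one-time setup;
// none of these names come from the Beatmup API.
class LazyTask {
public:
    // Runs the task; performs the one-time setup only on the first call.
    void run() {
        if (!prepared) {
            prepare();
        }
        assert(prepared);  // resources are always ready past this point
        ++runCount;
    }

    int setupCount() const { return setups; }
    int runs() const { return runCount; }

private:
    // Stands in for building shader programs and allocating memory.
    void prepare() {
        buffers.assign(1024, 0.0f);
        ++setups;
        prepared = true;
    }

    bool prepared = false;
    int setups = 0;
    int runCount = 0;
    std::vector<float> buffers;
};
```

Calling `run()` several times on the same `LazyTask` performs the setup once. For the real task, this suggests running one warm-up inference before measuring latency.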

Definition at line 33 of file inference_task.h.

Constructor & Destructor Documentation

◆ InferenceTask()

| InferenceTask::InferenceTask (Model &model, ChunkCollection &data) | inline |

Definition at line 48 of file inference_task.h.

Member Function Documentation

◆ beforeProcessing()

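| void InferenceTask::beforeProcessing (ThreadIndex threadCount, ProcessingTarget target, GraphicPipeline *gpu) | override private virtual |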

Instruction called before the task is executed.

- Parameters
  - threadCount: Number of threads used to perform the task
  - target: Device used to perform the task
  - gpu: A graphic pipeline instance; may be null.

Reimplemented from Beatmup::AbstractTask.

Definition at line 31 of file inference_task.cpp.

◆ afterProcessing()

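| void InferenceTask::afterProcessing (ThreadIndex threadCount, GraphicPipeline *gpu, bool aborted) | override private virtual |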

Instruction called after the task is executed.

- Parameters
  - threadCount: Number of threads used to perform the task
  - gpu: GPU to be used to execute the task; may be null.
  - aborted: true if the task was aborted

Reimplemented from Beatmup::AbstractTask.

Definition at line 38 of file inference_task.cpp.

◆ processOnGPU()

| bool InferenceTask::processOnGPU (GraphicPipeline &gpu, TaskThread &thread) | override private virtual |

Executes the task on GPU.

- Parameters
  - gpu: graphic pipeline instance
  - thread: associated task execution context

- Returns
  - true if the execution finished correctly, false otherwise

Reimplemented from Beatmup::AbstractTask.

Definition at line 45 of file inference_task.cpp.

◆ process()

| bool InferenceTask::process (TaskThread &thread) | override private virtual |

Executes the task on CPU within a given thread.

Generally called by multiple threads.

- Parameters
  - thread: associated task execution context

- Returns
  - true if the execution finished correctly, false otherwise

Reimplemented from Beatmup::GpuTask.

Definition at line 51 of file inference_task.cpp.

◆ getMaxThreads()

| ThreadIndex InferenceTask::getMaxThreads () const | inline override private virtual |

Gives the upper limit on the number of threads the task may be performed by.

The actual number of threads running a specific task may be less than or equal to the returned value, depending on the number of workers in the ThreadPool running the task.
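One way to read the relationship between this limit and the actual thread count is as a clamp against the pool size. The helper below is a sketch under that assumption: `effectiveThreads` and its parameters are hypothetical, plain `int` replaces `ThreadIndex`, and the real scheduling logic in ThreadPool is not shown in this page.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: the task's upper limit clamped by the number of
// workers available in the pool. Not part of the Beatmup API.
inline int effectiveThreads(int taskMaxThreads, int poolWorkers) {
    assert(taskMaxThreads >= 1 && poolWorkers >= 1);
    return std::min(taskMaxThreads, poolWorkers);
}
```

Under this reading, a task whose limit is 1 runs single-threaded regardless of pool size, and a task with a larger limit never uses more threads than the pool has workers.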

Reimplemented from Beatmup::GpuTask.

Definition at line 41 of file inference_task.h.

◆ connect() [1/2]

| void InferenceTask::connect (AbstractBitmap &image, AbstractOperation &operation, int inputIndex = 0) |

Connects an image to a specific operation input.

Ensures the image content is up-to-date in GPU memory by the time the inference is run.

- Parameters
  - [in] image: The image
  - [in] operation: The operation
  - [in] inputIndex: The input index of the operation

Definition at line 25 of file inference_task.cpp.
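The `inputImages` attribute listed above is a map keyed by an (operation, input index) pair, which suggests how a connection might be recorded. The sketch below uses stand-in types and a hypothetical `InputBindings` class. It assumes `connect()` simply stores the binding and that a later call for the same input replaces the earlier one; the actual function body is not shown in this page, and the GPU synchronization it mentions is omitted here.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>

// Stand-in types; the real AbstractBitmap and AbstractOperation are far richer.
struct AbstractBitmap { std::string label; };
struct AbstractOperation { std::string name; };

// Hypothetical registry mirroring the shape of the inputImages attribute.
class InputBindings {
public:
    // Binds an image to input `inputIndex` of `operation`,
    // replacing any earlier binding for the same input (an assumption).
    void connect(AbstractBitmap& image, AbstractOperation& operation, int inputIndex = 0) {
        assert(inputIndex >= 0);
        inputImages[std::make_pair(&operation, inputIndex)] = &image;
    }

    // Returns the bound image, or nullptr if that input has no image connected.
    AbstractBitmap* lookup(AbstractOperation& operation, int inputIndex = 0) const {
        auto it = inputImages.find(std::make_pair(&operation, inputIndex));
        return it == inputImages.end() ? nullptr : it->second;
    }

private:
    std::map<std::pair<AbstractOperation*, int>, AbstractBitmap*> inputImages;
};
```

Keying by the pair lets one operation take several images on different inputs, while each input holds at most one image at a time.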

◆ connect() [2/2]

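| void InferenceTask::connect (AbstractBitmap &image, const std::string &operation, int inputIndex = 0) | inline |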

Definition at line 58 of file inference_task.h.

Member Data Documentation

◆ inputImages

| std::map< std::pair< AbstractOperation *, int >, AbstractBitmap * > InferenceTask::inputImages | private |

Definition at line 35 of file inference_task.h.

◆ data

| ChunkCollection & InferenceTask::data | protected |

Definition at line 44 of file inference_task.h.

◆ model

| Model & InferenceTask::model | protected |

Definition at line 45 of file inference_task.h.

The documentation for this class was generated from the following files:

- core/nnets/inference_task.h

- core/nnets/inference_task.cpp